Page 80 - B.E CSE Curriculum and Syllabus R2017 - REC

P. 80

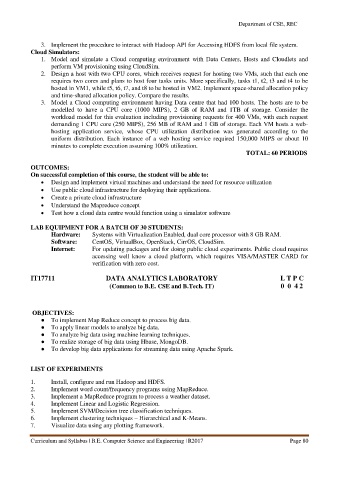

Department of CSE, REC

3. Implement the procedure to interact with Hadoop API for Accessing HDFS from local file system.

Cloud Simulators:

1. Model and simulate a Cloud computing environment with Data Centers, Hosts and Cloudlets, and perform VM provisioning using CloudSim.

2. Design a host with two CPU cores that receives a request to host two VMs, each of which requires two cores and plans to host four task units. More specifically, tasks t1, t2, t3 and t4 are to be hosted in VM1, while t5, t6, t7 and t8 are to be hosted in VM2. Implement the space-shared and time-shared allocation policies and compare the results.

3. Model a Cloud computing environment with a data centre that has 100 hosts. Each host is to be modelled with one CPU core (1000 MIPS), 2 GB of RAM and 1 TB of storage. The workload model for this evaluation consists of provisioning requests for 400 VMs, with each request demanding 1 CPU core (250 MIPS), 256 MB of RAM and 1 GB of storage. Each VM hosts a web-hosting application service whose CPU utilization is generated according to the uniform distribution. Each instance of the web-hosting service requires 150,000 MI (million instructions), or about 10 minutes of execution at 250 MIPS assuming 100% utilization.
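As a starting point for exercise 2 above, the following is a minimal sketch in plain Python (not CloudSim code) of how the two allocation policies differ. It assumes the same policy is applied at both the VM level and the task level, which is a simplification, since CloudSim allows the two levels to be configured separately. The task length (1000 MI) and core speed (1000 MIPS) are assumed values chosen for illustration; the exercise does not specify them.

```python
from typing import Dict, List, Tuple

Task = Tuple[str, float]  # (task name, length in MI)

# Assumed values, not given in the exercise: every task is 1000 MI long
# and each of the two host cores delivers 1000 MIPS.
CORES = 2
MIPS = 1000.0
VMS: List[List[Task]] = [
    [("t1", 1000.0), ("t2", 1000.0), ("t3", 1000.0), ("t4", 1000.0)],  # VM1
    [("t5", 1000.0), ("t6", 1000.0), ("t7", 1000.0), ("t8", 1000.0)],  # VM2
]


def space_shared(vms: List[List[Task]], cores: int, mips: float) -> Dict[str, float]:
    """Finish times when VMs run one after another and each task owns a full core."""
    finish: Dict[str, float] = {}
    clock = 0.0
    for tasks in vms:                      # a VM holds all cores until it is done
        core_free = [clock] * cores        # time at which each core becomes free
        for name, length in tasks:
            i = core_free.index(min(core_free))  # earliest available core
            core_free[i] += length / mips
            finish[name] = core_free[i]
        clock = max(core_free)             # the next VM starts when this one ends
    return finish


def time_shared(vms: List[List[Task]], cores: int, mips: float) -> Dict[str, float]:
    """Finish times when all tasks of all VMs share the cores equally."""
    remaining = {name: float(length) for tasks in vms for name, length in tasks}
    finish: Dict[str, float] = {}
    clock = 0.0
    while remaining:
        # Every active task gets an equal slice, capped at one full core.
        rate = min(mips, cores * mips / len(remaining))
        step = min(remaining.values()) / rate  # time until the next task finishes
        clock += step
        for name in list(remaining):
            remaining[name] -= rate * step
            if remaining[name] <= 1e-9:
                finish[name] = clock
                del remaining[name]
    return finish


def mean(values) -> float:
    values = list(values)
    return sum(values) / len(values)


if __name__ == "__main__":
    ss = space_shared(VMS, CORES, MIPS)
    ts = time_shared(VMS, CORES, MIPS)
    print("space-shared:", ss, "mean =", mean(ss.values()))  # mean = 2.5
    print("time-shared: ", ts, "mean =", mean(ts.values()))  # mean = 4.0
```

With these assumed numbers, the space-shared policy finishes t1 and t2 after 1 s and t8 after 4 s (mean 2.5 s), whereas the time-shared policy finishes all eight tasks together at 4 s. The total completion time is the same; only the individual finish times differ, which is the comparison the exercise asks for.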

TOTAL: 60 PERIODS

OUTCOMES:

On successful completion of this course, the student will be able to:

● Design and implement virtual machines and understand the need for resource utilization.

● Use public cloud infrastructure for deploying their applications.

● Create a private cloud infrastructure.

● Understand the MapReduce concept.

● Test how a cloud data centre would function using simulator software.

LAB EQUIPMENT FOR A BATCH OF 30 STUDENTS:

Hardware: Systems with virtualization enabled, a dual-core processor and 8 GB RAM.

Software: CentOS, VirtualBox, OpenStack, CirrOS, CloudSim.

Internet: For updating packages and for doing public cloud experiments. Public cloud experiments require access to a well-known cloud platform, which requires a Visa/MasterCard for verification at zero cost.

IT17711 DATA ANALYTICS LABORATORY L T P C

(Common to B.E. CSE and B.Tech. IT) 0 0 4 2

OBJECTIVES:

● To implement the MapReduce concept to process big data.

● To apply linear models to analyze big data.

● To analyze big data using machine learning techniques.

● To realize storage of big data using HBase and MongoDB.

● To develop big data applications for streaming data using Apache Spark.

LIST OF EXPERIMENTS

1. Install, configure and run Hadoop and HDFS.

2. Implement word count/frequency programs using MapReduce.

3. Implement a MapReduce program to process a weather dataset.

4. Implement Linear and Logistic Regression.

5. Implement SVM/Decision tree classification techniques.

6. Implement clustering techniques – Hierarchical and K-Means.

7. Visualize data using any plotting framework.
