Page 93 - B.E CSE Curriculum and Syllabus R2017 - REC

P. 93

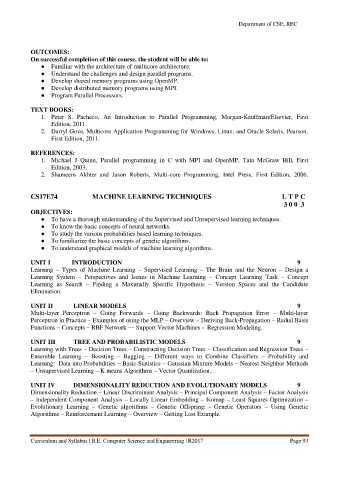

Department of CSE, REC

OUTCOMES:

On successful completion of this course, the student will be able to:

● Explain the architecture of multicore processors.

● Understand the challenges of parallel programming and design parallel programs.

● Develop shared memory programs using OpenMP.

● Develop distributed memory programs using MPI.

● Program Parallel Processors.

TEXT BOOKS:

1. Peter S. Pacheco, An Introduction to Parallel Programming, Morgan Kaufmann/Elsevier, First Edition, 2011.

2. Darryl Gove, Multicore Application Programming for Windows, Linux, and Oracle Solaris, Pearson, First Edition, 2011.

REFERENCES:

1. Michael J. Quinn, Parallel Programming in C with MPI and OpenMP, Tata McGraw Hill, First Edition, 2003.

2. Shameem Akhter and Jason Roberts, Multi-core Programming, Intel Press, First Edition, 2006.

CS17E74 MACHINE LEARNING TECHNIQUES L T P C

3 0 0 3

OBJECTIVES:

● To gain a thorough understanding of supervised and unsupervised learning techniques.

● To know the basic concepts of neural networks.

● To study the various probability-based learning techniques.

● To become familiar with the basic concepts of genetic algorithms.

● To understand graphical models of machine learning algorithms.

UNIT I INTRODUCTION 9

Learning – Types of Machine Learning – Supervised Learning – The Brain and the Neuron – Designing a Learning System – Perspectives and Issues in Machine Learning – Concept Learning Task – Concept Learning as Search – Finding a Maximally Specific Hypothesis – Version Spaces and the Candidate Elimination Algorithm.

UNIT II LINEAR MODELS 9

Multi-layer Perceptron – Going Forwards – Going Backwards: Back-Propagation of Error – Multi-layer Perceptron in Practice – Examples of Using the MLP – Overview – Deriving Back-Propagation – Radial Basis Functions – Concepts – RBF Network – Support Vector Machines – Regression Modeling.

UNIT III TREE AND PROBABILISTIC MODELS 9

Learning with Trees – Decision Trees – Constructing Decision Trees – Classification and Regression Trees – Ensemble Learning – Boosting – Bagging – Different Ways to Combine Classifiers – Probability and Learning: Data into Probabilities – Basic Statistics – Gaussian Mixture Models – Nearest Neighbor Methods – Unsupervised Learning – K-Means Algorithm – Vector Quantization.

UNIT IV DIMENSIONALITY REDUCTION AND EVOLUTIONARY MODELS 9

Dimensionality Reduction – Linear Discriminant Analysis – Principal Component Analysis – Factor Analysis – Independent Component Analysis – Locally Linear Embedding – Isomap – Least Squares Optimization – Evolutionary Learning – Genetic Algorithms – Genetic Offspring – Genetic Operators – Using Genetic Algorithms – Reinforcement Learning – Overview – Getting Lost Example.
